This article explains the principles of stereoscopic imaging, the technologies used to create and view stereoscopic images on PCs, their evolution, and their impact on game development. It also highlights important aspects for developers planning to add stereoscopic support to their games.

My experience in developing stereoscopic drivers allows me to cover this topic not as an outside observer but as someone directly involved in the field.

A stereoscopic image is a pair of images, one created for each eye, that simulates the difference in viewing angle between the two human eyes.

The same object in the image for the left eye is shifted relative to its position in the image for the right eye. The value of this shift is called horizontal parallax. Parallax naturally occurs when we look at a three-dimensional object with our eyes, but it can also be created artificially.

When such artificially created images for different eyes are processed by the visual cortex, it interprets the differences between them as perceived depth, creating a sense of three-dimensionality.

Creating the Stereoscopic Images

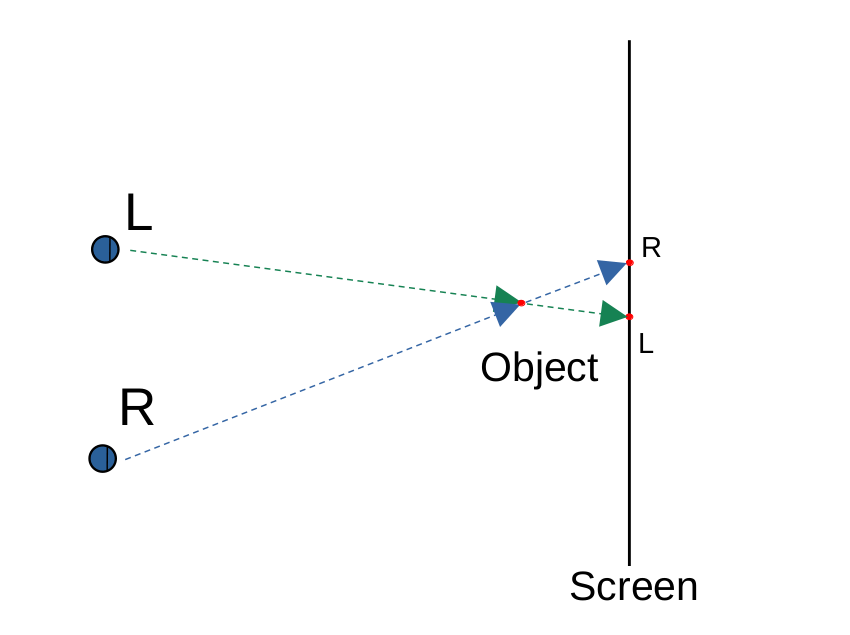

Stereoscopic images are created based on the principle shown in Figure 1. The surface of the screen is the plane of zero parallax: objects lying on it are positioned identically in the images for the left and right eyes. Suppose we need to display an object located behind the screen. We draw lines from the eyes to the object.

The points where these lines intersect the screen surface are where the object should be drawn in the images for the left and right eyes, respectively, to form a correct stereoscopic image. Note that the object in the image for the left eye is positioned to the left of its position in the image for the right eye. The farther the object is behind the screen, the greater the parallax. For objects at a significant distance, the parallax approaches the distance between the centers of the eye pupils, typically around 6 cm.

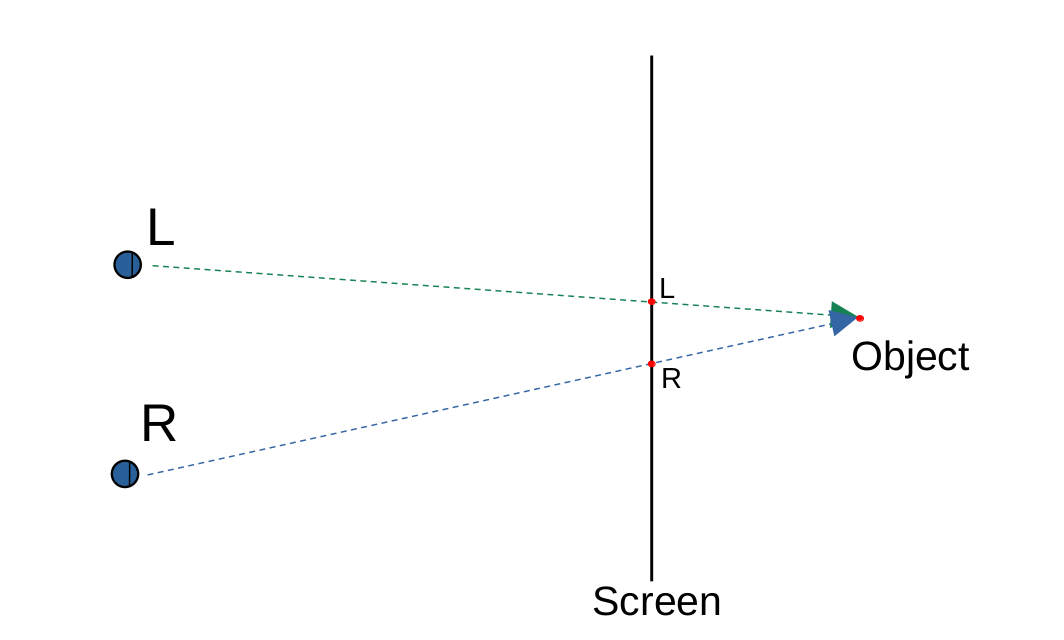

But what if we need to display an object located in front of the screen? This is also possible (see Figure 2). We draw lines from the eyes to the object and then extend them to the screen surface. Again, the points where these lines intersect with the screen surface are the points where the object should be placed in the images for the left and right eyes.

In this case, however, the object in the image for the left eye is positioned to the right of its position in the image for the right eye.

The parallax value becomes negative, and the closer the object is to the eyes, the greater its magnitude. Game developers should be aware that objects behind the screen can be clipped by the screen edges without looking wrong (like a window cutting off part of the view outside). However, clipping objects in front of the screen should be avoided, as it looks uncomfortable and unnatural to the brain, quickly leading to fatigue.

So, we have figured out how to create correct stereoscopic images. But how can we show two images to the eyes so that each eye sees only its own image?

Various technologies have been used for this purpose, with their popularity changing over time and with technological advancements.

Stereoscopic Image Technologies

Anaglyph 3D

Historically, the first technology was anaglyph 3D — a method of separating images for each eye using different colored filters, usually red and cyan. This technology appeared long before the advent of computers. When a viewer looks through glasses with colored filters at images where the left eye’s image (with the red filter) lacks cyan color and the right eye’s image (with the cyan filter) lacks red color, a stereoscopic effect occurs.
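To make the channel separation concrete, here is a minimal sketch of how a red-cyan anaglyph could be composed from two already rendered eye images. The `Rgb` type and the `makeAnaglyph` function are hypothetical names introduced for illustration. The sketch assumes both images have the same size and simply takes the red channel from the left image and the green and blue channels from the right one. Real products may apply additional color correction.

```cpp
#include <cstddef>
#include <cstdint>
#include <stdexcept>
#include <vector>

// One 8-bit-per-channel pixel (hypothetical helper type).
struct Rgb {
    std::uint8_t r, g, b;
};

// Compose a red-cyan anaglyph: the red channel comes from the left-eye
// image, the green and blue (cyan) channels from the right-eye image.
std::vector<Rgb> makeAnaglyph(const std::vector<Rgb>& leftEye,
                              const std::vector<Rgb>& rightEye) {
    if (leftEye.size() != rightEye.size()) {
        throw std::invalid_argument("eye images must have the same size");
    }
    std::vector<Rgb> result(leftEye.size());
    for (std::size_t i = 0; i < leftEye.size(); ++i) {
        result[i] = Rgb{leftEye[i].r, rightEye[i].g, rightEye[i].b};
    }
    return result;
}
```

Seen through the glasses, the red filter passes mostly the left-eye contribution and the cyan filter mostly the right-eye contribution.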

The drawbacks of anaglyph 3D include color distortion due to the filters, reduced brightness, and insufficient image separation. The latter occurs because the inexpensive mass-market red filter partially allows cyan light through, and vice versa, the cyan filter partially allows red light through. Therefore, prolonged viewing with anaglyph glasses can cause eye strain and discomfort.

Polarization Filters

Another technology worth mentioning is the polarized 3D system with polarization glasses. This technology was widely used in cinemas, but its application on PCs was limited due to the need for specialized equipment. Initially, vertical polarization filters for the left eye and horizontal polarization filters for the right eye were used along with two synchronized projectors, each with its own type of polarization.

This method achieves a stereoscopic effect without the color distortions typical of anaglyph technology. However, head tilting causes image mixing for different eyes, so modern cinema projection systems often use circular polarization.

Active Shutter Glasses 3D

The most widely used technology for regular monitors was active shutter glasses. In this method, images for the left and right eyes are alternately displayed at high speed. The glasses used in this system have liquid crystal shutters that change from transparent to opaque and are in sync with the images on the screen. The shutters in the glasses alternately block the light for one eye while the other eye sees the image, creating a sense of three-dimensionality.

Active shutter systems require precise synchronization between the glasses and the display, using wireless technologies such as infrared or Bluetooth. These systems are widely used in projectors, televisions, and PC monitors. For comfortable viewing, a high refresh rate of at least 120 Hz is required, allowing each eye to see 60 frames per second to avoid noticeable flicker.

Virtual Reality (VR)

Virtual reality is the leading stereoscopic technology today. A VR system includes a headset with built-in displays and lenses, motion-tracking sensors, and controllers for interaction. VR headsets can connect to a PC or operate independently. Many VR headsets use a single display split into two halves, with each eye seeing only its part, while others use a separate display for each eye. Lenses in VR headsets change the focal distance of the display, which is very close to the eyes, making it appear at a more comfortable viewing distance of 1 to 2 meters.

Additionally, the lenses expand the field of view to cover a larger portion of peripheral vision, creating a sense of full immersion in the virtual environment.

Timeline of Stereoscopic Imaging Technologies on the PC

Let's consider how the popularity of technologies changed and how they were practically used on PCs. In the early 2000s, shutter glasses technology on CRT monitors was dominant. But how do you link the monitor's image to the glasses? Graphics cards of that time couldn't alternately display images for each eye at a high enough frequency.

A simple solution was used: a frame was created with the top half containing the image for the left eye and the bottom half for the right eye. A special device called a VGA converter was connected between the graphics card and the monitor, doubling the monitor's scan rate to alternately display the top and bottom halves of the frame on the entire screen while simultaneously sending a signal to the shutter glasses to close the appropriate eye.
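The frame layout described above can be sketched as follows. This is only an illustration of the packing step, under the assumption that each eye image has already been rendered at half the vertical resolution. The `Image` type and `packTopBottom` function are hypothetical and do not come from any product.

```cpp
#include <cstddef>
#include <cstdint>
#include <stdexcept>
#include <vector>

// Row-major image with one 32-bit value per pixel (hypothetical helper type).
struct Image {
    std::size_t width = 0;
    std::size_t height = 0;
    std::vector<std::uint32_t> pixels;  // expected size: width * height
};

// Pack two equally sized eye images into one top-bottom frame:
// rows [0, h) hold the left-eye image, rows [h, 2h) the right-eye image.
Image packTopBottom(const Image& leftEye, const Image& rightEye) {
    if (leftEye.width != rightEye.width || leftEye.height != rightEye.height) {
        throw std::invalid_argument("eye images must have the same size");
    }
    if (leftEye.pixels.size() != leftEye.width * leftEye.height ||
        rightEye.pixels.size() != rightEye.width * rightEye.height) {
        throw std::invalid_argument("pixel buffer does not match dimensions");
    }
    Image frame;
    frame.width = leftEye.width;
    frame.height = leftEye.height * 2;
    frame.pixels.reserve(frame.width * frame.height);
    // Row-major storage means stacking the images is a plain concatenation.
    frame.pixels.insert(frame.pixels.end(), leftEye.pixels.begin(), leftEye.pixels.end());
    frame.pixels.insert(frame.pixels.end(), rightEye.pixels.begin(), rightEye.pixels.end());
    return frame;
}
```

The converter then only has to scan out the top half and the bottom half in turn, which is why no change to the graphics card itself was needed.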

One of the most well-known and widely used solutions in those years was Metabyte's Wicked3D EyeSCREAM. This product supported many DirectX and OpenGL games without requiring special game adaptation.

The cost of three-dimensionality with this approach was a twofold reduction in vertical resolution. A similar principle was used in Wicked3D EyeSCREAM Light for anaglyph: the left eye's image was formed in even lines, and the right eye's in odd lines. Only this way could acceptable performance be achieved on the graphics cards of that time.

Below is an example of a two-dimensional image and the same scene in anaglyph 3D.

Anaglyph 3D was the most budget-friendly way to experience 3D technology. But only a few computer games, such as "Quake II" and "Serious Sam," had built-in support for this technology. Therefore, for those who wanted to try anaglyph 3D in other games, there was no alternative to Wicked3D EyeSCREAM Light, which supported most OpenGL games of that time. Several viewers could watch anaglyph 3D simultaneously, making it convenient for shared use.

Then, there was a temporary decline in interest in shutter glasses. Interestingly, this happened due to the spread of LCD monitors. Yes, because of the same liquid crystals used in shutter glasses. This occurred because LCD monitors did not support refresh rates above 60 Hz for a long time.

Only after nearly a decade did LCD monitors with refresh rates of 120 Hz and higher appear, and with the release of Nvidia's 3D Vision® kit in 2008, shutter glasses technology had a resurgence. Improved graphics card performance and the implementation of 3D functionality by the graphics card manufacturer ensured broad support for various games. No VGA converter was needed, and there was no reduction in vertical resolution. The golden age of shutter glasses came in 2012, with many TV models including them.

However, just a few years later, interest declined, and in 2019, Nvidia discontinued support for 3D Vision® in its drivers. This decline was again due to advances in LCD technology. The mass production of high-resolution, high-quality phone screens made these screens cheaper and more accessible, and the requirements for VR headset screens were similar.

Early VR headsets suffered from low resolution: their 800x600 pixels cannot be seriously compared to the 2064x2208 pixels per eye of the Meta Quest 3. Not only resolution but also brightness and color reproduction improved. It’s no wonder that VR headsets are now so popular.

Software Aspects

Returning to the software side, the situation for game developers has become more complex in some ways and simpler in others. Previously, vendors of active shutter glasses products, such as Metabyte, were responsible for game compatibility with their kits. During the reign of Nvidia 3D Vision®, game developers only needed to check their game's compatibility.

The graphics card driver handled stereoscopic image creation, and game developers only had to ensure that flat objects meant to lie on the screen surface were given the correct depth. For two-dimensional images, this depth is insignificant, but in stereoscopic images such objects might appear in front of or behind the screen if depth is not set properly.

As for VR headsets, explicit support from developers is required. This makes sense, as a headset is not only a screen but also sensors that determine the camera's position and orientation. Thus, modern VR development requires using special SDKs that standardize the process of creating applications and games for various VR systems. The most popular among them are:

- OpenVR: Developed by Valve, OpenVR is a universal platform supporting multiple VR devices. It allows developers to create content compatible with various headsets, including HTC Vive and other SteamVR-supported devices. OpenVR provides access to motion tracking and device control through a unified API.

- Oculus SDK: This SDK from Oculus (now part of Meta, formerly Facebook) is designed to develop applications for Oculus Rift and Oculus Quest headsets. Oculus SDK offers tools for motion tracking, stereoscopic image rendering, and controller management.

- Windows Mixed Reality: Microsoft offers Windows Mixed Reality, its platform for developing VR and AR applications. This platform supports various headsets from different manufacturers and provides a rich set of tools for developing and integrating VR content into the Windows ecosystem.

Developers must create stereoscopic images themselves. For example, when using OpenVR in combination with DirectX, the process usually involves the following steps:

- Initializing OpenVR (vr::VR_Init) and checking if a VR headset is connected.

- Creating textures (CreateTexture2D) and render targets (CreateRenderTargetView) for each eye.

- Rendering the scene for each eye.

- Submitting the textures with the images to the VR headset:

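```cpp
// leftEyeTexture and rightEyeTexture are vr::Texture_t structures
// describing the rendered image for each eye.
vr::VRCompositor()->Submit(vr::Eye_Left, &leftEyeTexture);
vr::VRCompositor()->Submit(vr::Eye_Right, &rightEyeTexture);
```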

However, in practice, things are simpler, as popular game engines like Unity and Unreal Engine support integration with the above VR SDKs. This significantly eases VR content development and allows game developers to focus on the creative aspects of their projects.

Conclusion

Stereoscopic imaging technologies on PCs have come a long way, from shutter glasses on CRT monitors to modern VR systems. It is important to acknowledge the pioneering contributions of companies like Nvidia and Metabyte to developing these technologies. Their innovations paved the way for today's VR solutions.

Enthusiasts and industry professionals continue to closely monitor new developments, such as the Apple Vision Pro, which have sparked renewed interest in VR and are likely to lead to new products. Even more realistic VR systems that will transform interaction with the digital world are expected in the near future.