TL;DR Summary

- I met a classmate in a parking lot and bonded over a lost $10 bill. What I didn’t know was that my classmate had built a model that translated baby cries and was looking for a co-founder. Thanks to Dr. Peter Scheurman’s Venture Lab, we were able to meet as business partners, have a place to work, and launch our company.

- In a few weeks, I built a server around the model and an Android app that parents could use to translate their baby’s wails. But the latency was terrible — no one wants to wait for a server to spin up or to upload audio files while holding a crying child.

- So, we decided to put the model in the app, but it was too large to fit. This meant shrinking its size through quantization without noticeably reducing accuracy and figuring out how to generate the inputs the model required on the device without crashing the app. We couldn’t have done it without Pete Warden, who gave us invaluable TensorFlow, Android, and startup advice.

- But now that the model was on the device, it was beyond our reach. How do we measure its performance? What does success look like? I built a layer of logging, monitoring, and user feedback around the model to capture all the information needed to judge performance. This data created a flywheel; every translation created new labeled training data — we were now growing our dataset with minimal effort.

- Once the app was working and being monitored, we had a dilemma: show one or many translations? Instinct says to show the most accurate translation; that’s the one that is most likely to be correct, after all. But what if the translation is only 70% confident? What about 60% or 50%? This was a subtle problem that helped us see how people actually used the app and discover a killer feature.

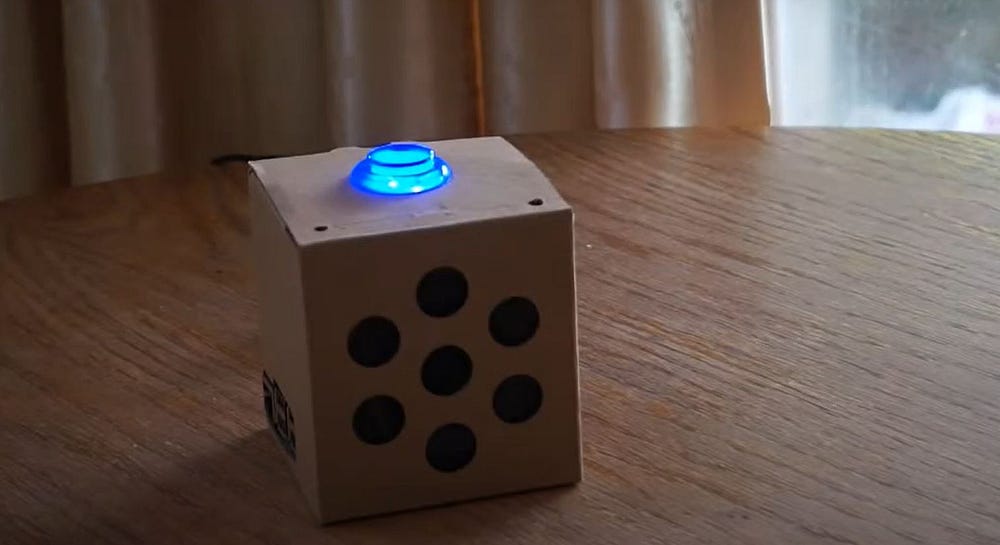

- It turns out it is very hard to find your phone and open the app while your child is crying in your arms. So, we made a baby monitor, Homer, using a Google Home smart speaker for hands-free translations.

- However, the smart speaker wasn’t enough to save us. We ran out of money and steam, life circumstances drove us back home, and our baby translator was put on the shelf.

Preface

I started working on an AI feature for work because I saw an opportunity to bypass a clunky, old expert tool, and of course, every start-up needs to be an AI start-up. While prototyping this feature, I encountered a few oddly familiar problems. So I looked through my journals and found Maggie, the baby translator a friend and I built pre-pandemic. It has been almost seven years, and the same problems that plagued AI testing and production deployment are still unmitigated, although the state of the art has made significant progress. Here is a reflection on my notes, thoughts, and code from Maggie to demonstrate the common problems on the way to productionizing AI. I hope you find it helpful.

What is Maggie?

Maggie is the name of the youngest child of the Simpsons from the show of the same name. Maggie is a baby who hasn’t learned to speak yet. Maggie’s uncle, Herb, hits rock bottom after a poor business decision and moves in with the Simpsons. He miraculously builds a working baby translator after drawing inspiration from his niece and the family’s frustration with her lack of speech. In a similar fashion, the idea of building a baby translator came to my friend Chris from his relationship with his baby sister, who had trouble picking up speech. Hence, we named our app Maggie.

Chris was working at a baby lab at the time, and he figured out there were physiological limitations to the spectrum of sounds children within a certain age range could make. From this insight, he gathered training data and built a classification model for baby cries — an impressive feat, as he was a Physics major with no CS experience.

We shared one class — Engineer Service Learning — where the entire class worked on the LEED certification for one of the buildings on campus. I remember him as the happy-go-lucky guy who rode a longboard into class. Our first interaction was outside the classroom, in the dark parking lot of a grocery store, where I saw him gearing up to ride his dirt bike. He had parked next to my car, and we talked. Then I noticed some crumpled-up paper next to him, picked it up, noticed it was money, and told him he had dropped his money. He said it wasn’t his, but I told him to keep it. We didn’t know how much it was; the parking lot was too dark. $1, $10, or $100. I told him I hoped it was $100. It was $10. But that first interaction built enough trust between us that in a few weeks, while we were both working at the Venture Lab — the startup incubator at UC Merced — he asked me to build an app to deploy his baby translator. Without the Venture Lab, we would have never thought of collaborating. I certainly didn’t go around telling random classmates that I build Android apps, nor did he go around bragging about his baby translation model. There’s a magnetic magic to in-person third spaces you shouldn’t undervalue.

I have a model, and it works. Now what?

Despite building a working model, Chris could not figure out how to deploy it. This was early 2017, and there were not many courses or guides demonstrating this. His mentor at TensorFlow recommended deploying on Android, but learning mobile development was a bridge too far. I knew Android and had deployed multiple apps on the Play Store, but even for me, this was a stretch. So, I did the easy thing.

I put the model on a server and made API calls to it. Executing the model in a Flask server was surprisingly easy: import TensorFlow, load the model from the local disk (I didn’t even think about deploying the model in a way it would be easy to version until later), and point the requests to it. But there were some gotchas:

- The request had to be transformed into the input the model was expecting, and we did not create a shared package to do this work. Since Chris trained the model and I created the server, this became a source of errors.

- The first request was always slow. We were hosting on App Engine, and I had left the number of idle servers at zero (the default) because I had never worked with a server that required such a long setup. Why spend money on idle servers when it only took a second to start new ones? But getting the model loaded and running took a long time, so we had to keep servers idling 24/7. You don’t know when a baby is going to start crying, which means a higher hosting bill.

- This slow start made integration testing a big headache, to the point that I fell back on manual tests. Waiting minutes for the tests to pass was unbearable. It was faster to spin up the server with hot-reload and manually test before each commit.

- Deployment and version control were tricky. Initially, I had deployed the model with the repo because I wanted some form of version control, but I quickly realized this was sacrificing ease of deployment. Every model update required deploying the server and restarting the entire cluster, which took time because the servers had to load the new model.

Despite these problems, it worked. Sharing the model input parser and transformer code solved the first problem. Treating the model as a black box and mocking it made building integration tests around it easier. These tests would ensure there were no unexpected misalignments between the server and model — as long as the mocks were accurate. Now, there are model interfaces that can ensure mock correctness during development. Deployment and version control for models is not a problem requiring novel solutions for most use cases nowadays.
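
As a rough illustration of those two fixes, here is a minimal Python sketch. The function names (`to_model_input`, `handle_request`), the framing logic, and the fake scores are all hypothetical stand-ins, not our original code; the real transformer built spectrograms. The idea is only that the server and the training code import the same transformer, and that the tests swap the slow model for a mock.

```python
from unittest.mock import Mock


def to_model_input(samples, frame_size=4):
    """Shared transformer (hypothetical): split samples into zero-padded frames."""
    frames = []
    for start in range(0, len(samples), frame_size):
        frame = list(samples[start:start + frame_size])
        frame += [0.0] * (frame_size - len(frame))  # pad the last frame
        frames.append(frame)
    return frames


def handle_request(samples, model):
    """Server-side handler: transform the request, run the model, pick the top label."""
    scores = model.predict(to_model_input(samples))
    return max(scores, key=scores.get)


# The model is treated as a black box: the mock returns canned scores instantly,
# so the test never waits for a real model to load.
fake_model = Mock()
fake_model.predict.return_value = {"hungry": 0.7, "sleepy": 0.2, "gassy": 0.1}

label = handle_request([0.1, 0.2, 0.3, 0.4, 0.5], fake_model)
```

The mock also records what the server sent to the model, so a test can check that the input has the shape the model was trained on. That check is only as good as the mock's fidelity to the real model.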

On the other hand, the latency and availability issues eventually pushed us to deploy the model on the app at the edge. This had the benefit of removing network latency, using the phone’s much faster CPU, and keeping the app running in the background, which solved our startup latency problem and lowered our hosting costs. But of course, there were new problems.

Where to put the model?

We spent a great deal of time debating where to put the model. We wanted the speed and certainty of the phone but craved the security and ease of deployment of the server. Initially, putting the model on the server was attractive:

- It was easy to update and deploy.

- It did not limit the number of users — at the time, only certain versions of Android supported the TensorFlow inference libraries, so putting the model on the phone meant fewer users.

- It offered greater optionality — the server’s API could be used by smart speakers, websites, and baby monitors.

- We didn’t have to worry about anyone stealing the model.

But the downsides kept piling up:

- It was slow; uploading the recordings, transcribing them, and then running the model took a long time.

- The risk of an outage made the app less compelling — our users needed 100% availability; the most attractive thing about the app was that it was there for you when your spouse, parents, etc., were not — it was there for you when you were alone with an inscrutable crying child.

- It was expensive; the machines required to do all this work were expensive for a startup that needed to use Google Cloud credits to pay for everything.

In the end, we decided to put the model in the app due to the first two points — the app was not compelling if you had to wait for it to translate or if it was occasionally down. A few seconds of latency seems like an eternity when you have a crying newborn in your arms. But putting the model on the phone had its issues, as I will describe in the next section.

The lesson I took away from this is that you should optimize for the best user experience — even if the solution is not scalable, doesn’t give you future growth, or risks your IP. Companies live and die by their user experience, especially if they are new companies that need to earn trust and build a brand.

Tales From the Edge

Putting the model on the phone came with a mountain of technical difficulties. Some of them don’t exist now, but most do. We were at the cutting edge. TensorFlow for Android had barely come out — the public release was in February 2017, and I was building the app in March. But with perseverance and plenty of help from Pete Warden at TensorFlow, we managed to get the model working in the app.

The first problem was getting the model in the APK package. At the time, Android had a 50 MB APK limit and an extension feature that allowed apps to be larger by downloading components after installation. However, I deemed the extension feature insecure since it meant exposing the model on a server. So, we decided to quantize the model, a technique that reduces the numerical precision of the values in every layer.

In our case, we converted all the floating point numbers into integers, which significantly reduced the size of the model and made it faster, but it also reduced the accuracy. Chris went through several iterations of the model with different quantization schemes and finally settled on integer quantization despite the reduction in accuracy. It was useful as long as the model performed better than new parents. Bonus: it solved the update issue. Downloading hundreds of MB of data every model update was a steep ask on metered internet connections, but a few MBs were manageable (this seems silly to me now since people regularly download GBs of video content on their phones today).
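
To make the trade-off concrete, here is a toy Python sketch of integer quantization. It assumes a symmetric 8-bit scheme with one scale per list of weights, which is a common approach but not necessarily the scheme Chris settled on, and the weights are made up. An 8-bit integer takes a quarter of the space of a 32-bit float, and the price is a small rounding error on every value.

```python
def quantize(weights):
    """Map floats to integers in [-127, 127] using a single shared scale."""
    max_abs = max(abs(w) for w in weights) or 1.0  # avoid a zero scale
    scale = max_abs / 127
    return [round(w / scale) for w in weights], scale


def dequantize(q_weights, scale):
    """Recover approximate floats from the stored integers."""
    return [q * scale for q in q_weights]


weights = [0.5, -1.27, 0.003, 1.0]  # made-up example weights
q, scale = quantize(weights)
restored = dequantize(q, scale)

# The worst-case rounding error is half a quantization step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

Note how the small weight `0.003` collapses to zero: that lost detail is where the accuracy drop comes from.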

Then came a long tail of minor problems. The biggest one was that the FFT libraries that were supported by the different versions of Java shipped with Android did not produce the same spectrogram the model was trained on. We trained the model using a C++ FFT library, and it produced spectrograms with different colors and dimensions. Being secretive tools, both the C++ and Java versions were written opaquely and were hard to modify. Another quick decision: we decided to retrain the model using the Java FFT spectrograms. This meant transforming all the audio files into spectrograms and then running the training process, which took days on my friend’s old MacBook. But the problem was solved, and I could focus on something else during that time.

But the app kept freezing and crashing. Turns out that loading the recording into memory and then creating the spectrogram in memory was a bad idea. The app was hogging a lot of the phone’s resources. The solution was to save to disk and stream. Saving to disk was easy; the streaming part was hard because, once the audio left memory, anything could happen to it on the disk. Another program can read and modify it; it can be deleted, it can fail to save, etc. These edge cases don’t generally exist on the web, where you have an isolated channel with your user, but they do on devices. Making sure there was enough space to do this in the first place and cleaning up after the app was an unexpected challenge. It is an ill-founded assumption, I later found out, to believe that if the phone had enough memory to install your app, then it had enough memory to run it.
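
The save-then-stream pattern looks roughly like the Python sketch below. The app itself was written for Android, so this is an illustration of the idea rather than our code; the chunk size, the `.pcm` suffix, and the stand-in recording are arbitrary assumptions.

```python
import os
import tempfile


def stream_chunks(path, chunk_bytes=4096):
    """Yield the file piece by piece so only one chunk is in memory at a time."""
    with open(path, "rb") as audio:
        while True:
            chunk = audio.read(chunk_bytes)
            if not chunk:
                break
            yield chunk


# Write a stand-in "recording" to disk, stream it back, and always clean up.
fd, path = tempfile.mkstemp(suffix=".pcm")
try:
    with os.fdopen(fd, "wb") as out:
        out.write(bytes(10_000))  # 10,000 zero bytes in place of real audio
    sizes = [len(chunk) for chunk in stream_chunks(path)]
finally:
    os.remove(path)  # cleaning up after the app is part of the job
```

A production version would also need to handle the file disappearing or changing between the write and the read, which is exactly the class of edge case described above.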

Lastly, the device itself was an adversary: Android phones come with a wide variety of CPUs, amounts of memory, and, most importantly, microphones. The CPU and memory issue could be solved by setting the required Android version to the latest and hoping for the best; the worst-case scenario was a slow app, since there is no way to set resource requirements in Android directly. The real problem was accounting for the varying quality of microphones: Android does let you require a microphone on the devices your app is installed on, but not the type of microphone.

I didn’t try to solve this problem. I had many tester phones at the time and noticed how much worse the predictions were on the cheap phones. I decided that there was no time to find a solution now — we were trying to launch as soon as possible. Sometimes, the best solution to a problem is to make a note of it and move on. Now I realize this issue pointed us in the direction of the final product — Homer, a smart speaker.

Once the technical difficulties were overcome (or avoided), we moved on to the harder problem of prediction quality. Since the model lived on the app, we were blind to its performance. If it performed badly, the only way we could tell was through comments on the app page or uninstalls; by then, it was too late. Therefore, building a wrapper of logs, monitoring, data collection, and feedback around the model was critical.

One or Many?

The model took spectrograms and a few other bits of information to categorize the sounds the baby was making: hungry, uncomfortable, in pain, sleepy, and gassy. These were the translations. It returned a list of all the possible categories, with each category having a percentage representing how confident the model was in that particular translation. All the percentages add up to 100: if the most confident translation was hungry at 90%, then the other four categories would have confidences that add up to 10%.
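
For readers unfamiliar with how a classifier produces percentages like these, the Python sketch below uses a softmax, a common way to turn raw scores into values that sum to 100. Whether Maggie's model used exactly this is an assumption, and the raw scores are invented.

```python
import math

LABELS = ["hungry", "uncomfortable", "in pain", "sleepy", "gassy"]


def confidences(raw_scores):
    """Softmax: convert raw scores into percentages that sum to 100."""
    peak = max(raw_scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in raw_scores]
    total = sum(exps)
    return {label: 100 * e / total for label, e in zip(LABELS, exps)}


result = confidences([4.0, 1.5, 0.5, 1.0, 0.2])  # made-up raw scores
best = max(result, key=result.get)
```

Because the percentages share a fixed budget of 100, a high score for one category necessarily pushes the others down.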

The question we faced early on was whether to display all the translations (ordered from most accurate to least) or just one. I opted to show just one because I didn’t want to confuse people with more translations, and it was simpler to build this (laziness is a cornerstone of Agile). However, we ran into issues with our first set of users.

When the model was certain (above 80%), the suggestion worked; we did not get complaints from parents. But it wasn’t always that confident. When the translation confidence was below 80%, it was no better than the parent guessing. This defeats the app's purpose; it should offer more accurate translations than a new parent’s panicked attempts to soothe their child. And it did; not surprisingly, the 2nd or 3rd translations were spot-on. We found this out through in-person interviews. In-person interviews are unscalable and time-consuming but extremely information-dense; if you can do one daily, do it. The baby would cry, and the app would show the wrong translation with low confidence, but we would try the other translations (we saw them in the logs), and they would work.

This was counterintuitive to me because I thought of the app as a translator; there should only be one way to translate something, right? Wrong. The app was an exploration tool for new parents and caretakers to determine what they should try first. Should I try to burp my baby? Is he hungry? When was the last time he took a nap? Every parent goes through this checklist when their baby starts to cry. Our app sped up this exploration; that’s how parents used it, and that’s the feedback we got once we rolled out multiple translations. This wasn’t the killer feature, but it certainly turned the app from a gimmick to a daily-use tool, a trusted companion when the spouse is gone or grandparents are asleep.

Backed by this data, we quickly decided to display more translations. The trick was figuring out how many. Displaying all the translations (all five categories) made the app look like it repeated the same translations every time. What would you think if Google showed you the same four restaurants every time you searched for food? We decided on the top three translations; this was part art and part data.

People are inclined to the number three for some reason. The data showed that the model’s confidence in other translations dropped significantly after the third translation. If the first translation had a confidence of 70%, the 2nd one would have 20%, the third 9%, and the rest below 1%. So, we dropped these translations. There was a small chance these dropped translations were accurate, but including them risked making the app look repetitive. The choice was that 1 out of 100 users would receive translations that were all wrong, or all users would see useless translations and think the app was guessing. It was an easy choice.
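
The cut-off itself is simple. Here is a minimal Python sketch using the example numbers above; the function name and the split of the last 1% between the two dropped categories are hypothetical.

```python
def top_translations(confidences, limit=3):
    """Rank translations by confidence and keep only the first `limit`."""
    ranked = sorted(confidences.items(), key=lambda item: item[1], reverse=True)
    return ranked[:limit]


# Example from the text: 70%, 20%, 9%, and the rest below 1%.
scores = {"hungry": 70.0, "sleepy": 20.0, "gassy": 9.0,
          "in pain": 0.6, "uncomfortable": 0.4}

shown = top_translations(scores)
covered = sum(confidence for _, confidence in shown)  # share of confidence displayed
```

With these numbers the three displayed translations cover 99% of the model's confidence, which is the 1-in-100 trade-off described above.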

Of course, with hindsight, I should have tested this using a multi-armed bandit: release five different versions of the app, one for each choice, e.g., show one translation, show two, show three, etc., and slowly migrate people onto the version of the app that had the best results. The primary success metric would be how many people clicked the green checkmark (happy with the translation). But at such an early stage, I believe we made the correct decision, and, more importantly, we made the decision in the correct way. We were open-minded, we did not consider any decision as concrete (no egos here), we constantly checked in with our users, and we listened to them, even if it didn’t feel right.
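
For the curious, a multi-armed bandit can be as small as the epsilon-greedy Python sketch below. We never ran this; the checkmark rates are invented for the simulation, and epsilon-greedy is just one of several bandit strategies.

```python
import random


def run_bandit(success_rates, rounds=5000, epsilon=0.1, seed=0):
    """Epsilon-greedy: mostly show the best variant so far, sometimes explore."""
    rng = random.Random(seed)
    arms = len(success_rates)
    shows = [0] * arms  # how many users saw each variant
    wins = [0] * arms   # how many of them tapped the green checkmark
    for _ in range(rounds):
        if 0 in shows or rng.random() < epsilon:
            # Explore (also used until every variant has been shown once).
            arm = rng.randrange(arms)
        else:
            # Exploit the variant with the best observed checkmark rate.
            arm = max(range(arms), key=lambda i: wins[i] / shows[i])
        shows[arm] += 1
        if rng.random() < success_rates[arm]:  # simulated user feedback
            wins[arm] += 1
    return shows, wins


# Made-up checkmark rates for "show 1", "show 2", ... "show 5" translations.
simulated_rates = [0.30, 0.45, 0.70, 0.50, 0.40]
shows, wins = run_bandit(simulated_rates)
```

In a real rollout, the simulated feedback line would be replaced by actual checkmark taps, and `shows` would tell you how traffic drifted toward the winning version.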

Learning On the Job

Every interaction is an opportunity to improve and impress. My worry from the onset of this venture was that the model was just lucky, that there was going to be a single dad or mom who was told their baby was crying because they’re gassy and they burp their baby to death. Unlikely, but we felt responsible for the well-being of each user’s baby. So, we delayed the launch by adding a feedback feature to the app.

After every translation, you were asked how helpful it was on an unskippable page. Then, the app uploaded the user feedback, output of the model, device type, and spectrogram. Initially, I uploaded the audio file but quickly realized that the microphone captured a lot of background noise. For our users' privacy, we chose to upload only the spectrogram. What I didn’t know then was that you can reverse a spectrogram and reconstruct the audio, albeit with poorer quality and with some difficulty. This was our flywheel.
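
A feedback record along these lines might look like the Python sketch below. The field names, the device string, and the rule for turning a record into a training example are all hypothetical; the source only fixes which four pieces of information were uploaded.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class FeedbackRecord:
    helpful: bool       # the user's answer on the feedback page
    model_output: dict  # confidence per translation
    device_type: str
    spectrogram: list   # uploaded instead of the raw audio


def to_training_example(record):
    """Assumed rule: a translation the user confirmed becomes a labeled example."""
    if not record.helpful:
        return None
    label = max(record.model_output, key=record.model_output.get)
    return {"label": label, "spectrogram": record.spectrogram}


record = FeedbackRecord(
    helpful=True,
    model_output={"hungry": 0.7, "sleepy": 0.3},
    device_type="example-phone",  # placeholder device name
    spectrogram=[[0.1, 0.2], [0.3, 0.4]],
)
payload = json.dumps(asdict(record))  # what would be uploaded
example = to_training_example(record)
```

Keeping the device type in every record is what later lets you notice that predictions are worse on particular phones.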

However, I am skipping over the debates and multiple drafts that led to this final version. Deciding what data to collect, how to measure success, and how to define success is hard, but it is an iterative process that you can do in small steps and improve on. The best advice I got was to define what success looks like or, if that is too hard, what failure looks like (for me, it is much easier to define failure than success; I never know what I want, but I am certain of what I want to avoid) and be specific. From that answer, derive the metrics you can use to judge your efforts: if failure is losing users before their baby is too old to use the app, then measure the average lifetime of the app on the user’s phone. But keep in mind that these definitions and metrics are not static; they will change. You just need to decide and stick to them until they stop working. They’ll stop working when you stop learning from them or at least when it becomes harder to learn from them. That’s the goal: to learn and improve. The rest are details.

In the AI/ML age, a company’s most valuable digital asset is the data it is entrusted with, not the model. Growing this data — preferably in a transparent and ethical fashion — is critical to continued success. Hence, every user interaction should be treated as a value-generation moment regardless of the conversion rates. So it follows that every interaction should leave the user impressed and wanting to return. We built something that did wow and inspire; we had a great designer, Teresa Ibarra, who took my crude draft and polished it into an app that was soothing and joyful to use. And every translation made the next one better.

The End

The last thing we built was Homer. After conducting dozens of in-person interviews with app users and calling even more people, we realized that looking for your phone, unlocking the screen, finding our app, opening it, and clicking translate while holding your crying child is hard and inconvenient. Why did it take us so long to realize this? We were two single, childless guys in our twenties; I can’t remember the last time I held a baby, let alone soothed its wails. So, we decided to build a baby monitor using a smart speaker. I ordered a kit from Raspberry Pi and built a custom Google Home speaker with a big blue button on top.

Chris had a great vision of selling the model to baby monitor companies, but we were not gaining traction with these companies, so why not build our own baby monitor using a smart speaker? After building the Google Home, I created a bash script to run the translator on startup. The translator used Google Home’s SDK to translate on the trigger phrase ‘Okay, Google, translate’. The translator was a Python script that read audio from the microphones, turned it into a spectrogram, and then sent it to a server to be translated. I kept things agile, and it worked! We finally had our killer app, but it didn’t save the company.

We ran out of funding — the prize money we had won from a competition. Life was quickly barreling down on both of us. We graduated college, and we both left for home — I accepted a job in the Bay Area, and Chris left to tend to his sick mother in Texas. The startup failed.

Startups are hard and heartbreaking. You can’t do them part-time. Everyone with a stake needs to be full-time or needs to leave to make space for someone else. Or you have to shrink your ambitions, maybe abandon them completely. Now, there are problems out there that can be solved profitably on a part-time basis — but are they worthwhile? Do they excite you?

If I learned anything — besides what a pain it is to deploy ML models on Android — it is that you really need to care about the problem you are solving. You need to find the courage to commit to it full-time despite the financial risks and tremendous opportunity cost. There was no way I was going to give up my tech job to work on this. I loved helping new parents and desperate dads; the technology was exhilarating to learn and build, but how could I explain to my dad why I was moving back to our cramped Section 8 apartment after graduating? How could I turn down a six-figure salary after living on Welfare for so long?

What about venture capital? Why didn’t you try to raise money? The reality is that VCs only pick up the phone if they know you, the school you went to, or the company you worked at. They don’t care what you built — short of it being profitable, your innovation is a minor detail — VCs invest in founders and teams first and products last. But most of them don’t know how to pick good founders or teams, so they let the admissions officers at elite schools or FAANG do that.