Authors:

(1) Ken Hasselmann, ECAM Brussels School of engineering, Brussels, Belgium and Universite libre de Bruxelles, Brussels, Belgium;

(2) Quentin Lurkin, ECAM Brussels School of engineering, Brussels, Belgium.

2. Course design

This section details the course design, i.e., the structure and organisation, both for lectures and practical sessions.

2.1. Audience

This course is designed for second-year engineering students enrolled in a three-year bachelor’s program, which is usually followed by a two-year master’s degree program. It is taught to students that chose to have a pre-specialization in computer science and electronics. It is one of the courses that gives students a grasp of the computer science specialization and therefore plays an important role in the students’ decisions when later selecting a specialization subject for their studies.

2.2. Lecture topics

Because the course is intended both as an introduction to specific programming paradigms in Python and as an introduction to AI for games, the lecture topics are divided into three main parts.

The first part of the lectures focuses on programming paradigms. The lectures cover mainly: (i) network programming, where students learn about the basics of networking and protocols in order to exchange messages on a local network programmatically; and (ii) concurrent programming, where they learn about threads and how to handle multiple concurrent computations.

The second part of the lectures focuses on classical AI for board games, namely: (i) basic data structures, such as stacks, heaps, and trees; (ii) search algorithms, such as breadth-first search, depth-first search, Dijkstra’s algorithm, and A* search; and (iii) adversarial search algorithms, such as the minimax algorithm, negamax, alpha-beta pruning, and iterative deepening.
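As an illustration of how the data structures and search algorithms listed above fit together, the following minimal sketch implements Dijkstra’s algorithm with a heap from Python’s standard `heapq` module. The graph `GRAPH` and the function name `dijkstra` are hypothetical and are not taken from the course material.

```python
import heapq


def dijkstra(graph, source):
    """Return the shortest distance from source to every reachable node.

    graph maps each node to a list of (neighbour, non-negative weight) pairs.
    """
    distances = {source: 0}
    frontier = [(0, source)]  # min-heap ordered by tentative distance
    while frontier:
        dist, node = heapq.heappop(frontier)
        if dist > distances.get(node, float("inf")):
            continue  # stale heap entry: a shorter path was already found
        for neighbour, weight in graph.get(node, []):
            candidate = dist + weight
            if candidate < distances.get(neighbour, float("inf")):
                distances[neighbour] = candidate
                heapq.heappush(frontier, (candidate, neighbour))
    return distances


# Hypothetical example graph, used only for illustration.
GRAPH = {
    "A": [("B", 1), ("C", 4)],
    "B": [("C", 2), ("D", 5)],
    "C": [("D", 1)],
    "D": [],
}

shortest = dijkstra(GRAPH, "A")
```

Here the heap plays the role of the priority queue, which is why heaps appear among the basic data structures before the search algorithms are introduced.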

The third part of the course presents the basic concepts of source code management with the use of git. In this part, the main concepts of git and its most common commands are presented, and students also learn about the importance of unit testing, how to implement good unit tests, and the concept of code coverage.

2.3. Project

In order to motivate students to grasp all the concepts described in the previous section, we ask them to build an AI agent for a board game. The board game to be tackled in the project changes every year. This year, the board game was Othello (see Fig. 1).

We ask the students to form groups of two. The project then consists in coming up with a good strategy to play the game, implementing it, and letting it compete in the final tournament at the end of the semester. We provide the students with a simple description of how to connect and use the tournament system (see Section 2.4 for a full description of this system).

We observed that students typically first take some time to understand the rules of the game, then prototype strategies on paper, and only then start to code. We ask them to code their AI agents in Python. We do not provide any specific instructions on what algorithm to use. We want students to experiment using different data structures and AI techniques to try and find a suitable one.

2.4. Tournament system

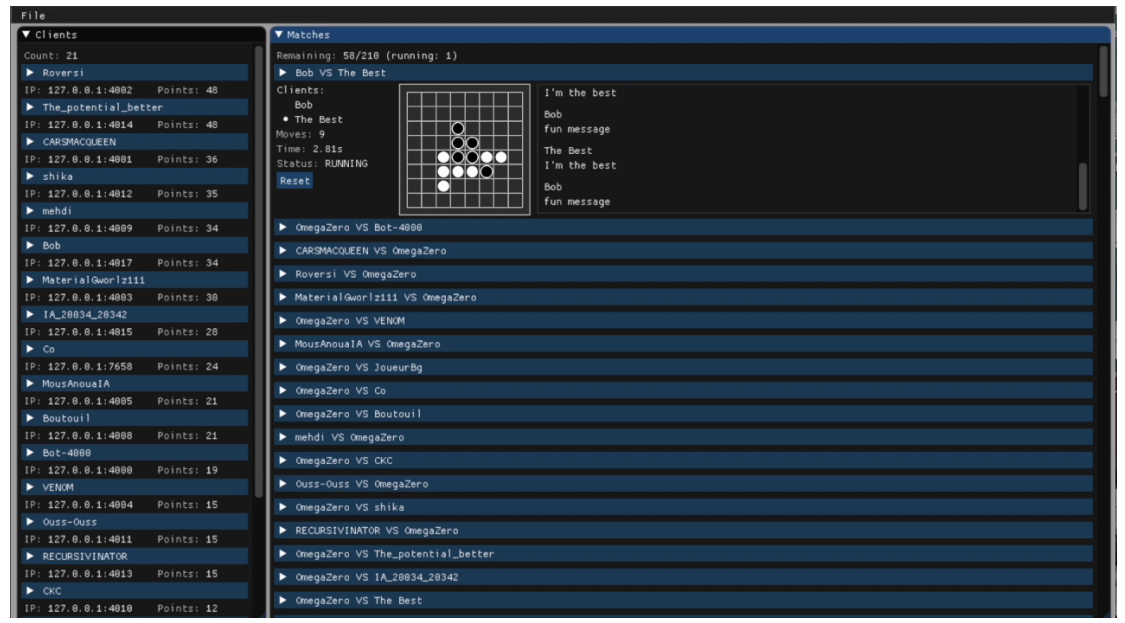

The tournament system works as follows: a game server hosts the game, and agents (clients) connect to this server to start a game and make moves on the board. The game server accepts direct TCP connections from agents, which communicate with it through a documented custom protocol.
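The custom protocol itself is not described in this section. As a rough sketch of what message exchange over a TCP connection can look like, the helpers below assume a purely hypothetical framing in which each message is one line of JSON; the function names and message fields are likewise assumptions, not the server’s actual protocol.

```python
import json
import socket


def send_message(sock, message):
    """Serialise a dict as one line of JSON and send it in full."""
    sock.sendall(json.dumps(message).encode("utf-8") + b"\n")


def receive_message(sock, buffer=b""):
    """Read until one full line has arrived; return (message, leftover bytes).

    TCP is a byte stream, so a single recv() may return part of a message
    or several messages at once. The leftover bytes must be passed back in
    on the next call.
    """
    while b"\n" not in buffer:
        chunk = sock.recv(4096)
        if not chunk:
            raise ConnectionError("connection closed before a full message arrived")
        buffer += chunk
    line, _, rest = buffer.partition(b"\n")
    return json.loads(line.decode("utf-8")), rest


# Local demonstration with a connected socket pair instead of a real server.
server_side, agent_side = socket.socketpair()
send_message(agent_side, {"request": "move", "move": 19})  # hypothetical fields
received, leftover = receive_message(server_side)
server_side.close()
agent_side.close()
```

Whatever framing the real protocol uses, an agent needs some way of deciding where one message ends and the next begins, which is the point this sketch is meant to show.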

Students have to build agents to connect to this server and play the game. Automatic matchmaking is implemented in the game server so that every connected client plays a game against all other connected clients. The game server waits for connections from agents. Once at least two agents are connected, the matchmaking starts and the first game is launched. The game server saves the state of the current game being played and queues all other games to be played. Once a game is started, an agent has 5 s to make a move at each turn. If the agent does not send an instruction within these 5 s, or if the move made by the agent is illegal, the game server registers a bad move, and the opposing agent takes a turn. At the end of the game, the full log of the game is saved, including bad moves and the winning agent.
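The matchmaking and bad-move rules described above can be sketched as follows. This is an illustrative reconstruction, not the server’s code: the names `schedule_games` and `is_bad_move` are hypothetical, and the sketch assumes that each pair of agents meets exactly once, which the text does not specify.

```python
from collections import deque
from itertools import combinations

MOVE_TIMEOUT_S = 5.0  # time allowed per turn, as stated in the text


def schedule_games(agents):
    """Queue one game for every unordered pair of connected agents."""
    return deque(combinations(agents, 2))


def is_bad_move(move, elapsed_s, legal_moves):
    """A move is bad if it is missing, arrives too late, or is illegal."""
    return move is None or elapsed_s > MOVE_TIMEOUT_S or move not in legal_moves


# Three connected agents yield three queued games.
queue = schedule_games(["group_1", "group_2", "random"])
current_game = queue.popleft()  # the first game is launched, the rest wait
```

With n connected agents this produces n(n-1)/2 games, so the queue grows quadratically with the number of student groups.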

During practical sessions, the game server runs on a teacher’s computer so that students can test their agents in the local network of the classroom. A random agent—an agent that plays a random move on the game board every turn—is constantly connected to the game server during the practical sessions so that any student agent connecting to the server automatically competes against it. This allows students to test their agents in a simple scenario. All games being played on the server are displayed on the server’s GUI (see Fig. 2).
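The move selection of such a random agent fits in a few lines. The sketch below is only an assumption about how it might look, since the actual implementation is not given; `random_move` and its parameters are hypothetical names.

```python
import random


def random_move(legal_moves, rng=random):
    """Pick one legal move uniformly at random, or None if there is none."""
    moves = list(legal_moves)
    if not moves:
        return None  # no legal move is available, so the agent has to pass
    return rng.choice(moves)


# A seeded generator makes the choice reproducible, which helps when testing.
move = random_move([19, 26, 37, 44], rng=random.Random(0))
```

Because it needs no search at all, an agent of this kind gives students a baseline that any working strategy should beat.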

We also provide the students with the code of the game server. This allows them to review and inspect code written by an external person, and helps them in their own implementation. They can also run the game server locally on their own computer, for example to test their agents at home. We want students to read the description of the game server carefully, understand how the system works, and propose an implementation that lets their agent connect and use the custom network protocol.

The playing system is thus designed to enforce that students use all notions seen in the lectures, if they want to succeed in connecting and playing the game.

2.5. AI agents

For the creation of the AI agent, we do not provide any boilerplate implementation, nor do we provide the implementation of the random agent. Students have access to the descriptions of classical algorithms presented during the lectures (see Section 2.2) and, of course, to any external resources they can find. We do not enforce any specifications on the algorithms to be used. We want students to experiment with different data structures and AI techniques to try and find a suitable one.

A typical implementation of an agent includes: (i) a network API for communicating with the game server; (ii) a model of the game’s rules to determine possible moves given a game board; (iii) an algorithm for determining the next move to be played.

The students have complete freedom in choosing the AI algorithm that determines the next move. Our minimal expectation for this part is that students model the game using a tree of possible actions and implement a search algorithm for navigating the tree to find a move. We choose games with a relatively high branching factor on purpose so that exhaustive search is impractical. A highly effective implementation might, for instance, employ Monte-Carlo tree search with a custom-trained heuristic.
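To make the minimal expectation concrete, here is a sketch of a depth-limited negamax search with alpha-beta pruning, two of the techniques listed in Section 2.2. It uses a deliberately tiny stand-in game rather than the course’s board game: players alternately take 1, 2, or 3 stones from a pile, and whoever takes the last stone wins. All names are hypothetical, and the neutral score returned at the depth limit stands in for a real heuristic.

```python
import math


def legal_moves(stones):
    """A player may take 1, 2, or 3 stones, but no more than remain."""
    return [take for take in (1, 2, 3) if take <= stones]


def negamax(stones, depth, alpha=-math.inf, beta=math.inf):
    """Score the position for the player about to move: +1 win, -1 loss."""
    if stones == 0:
        return -1  # the opponent took the last stone, so the mover has lost
    if depth == 0:
        return 0  # depth limit reached: neutral placeholder heuristic
    best = -math.inf
    for take in legal_moves(stones):
        # The opponent's best score is the negation of ours.
        score = -negamax(stones - take, depth - 1, -beta, -alpha)
        best = max(best, score)
        alpha = max(alpha, score)
        if alpha >= beta:
            break  # prune: the opponent will never allow this branch
    return best


def best_move(stones, depth=10):
    """Return the move whose resulting position is worst for the opponent."""
    best_take, best_score = None, -math.inf
    for take in legal_moves(stones):
        score = -negamax(stones - take, depth - 1)
        if score > best_score:
            best_take, best_score = take, score
    return best_take
```

In a game with a high branching factor the depth limit is reached long before the game ends, so the quality of the heuristic used at that point largely determines the strength of the agent.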

2.6. Evaluation

Students are evaluated on different aspects and at different stages during the course. The first part of the evaluation consists of individual programming exercises done in class, during a one-hour exam period. Students complete four programming exercises with precise specifications. For this exam, they only have access to a computer, the lecture materials, and the latest version of the Python documentation. The programs produced by the students are then individually tested. The students’ scores depend on whether their code passes all unit tests.

The second part of the evaluation is on the creation of the board game AI agent. Each group of students has to submit their work using an online git repository. Repositories are then fetched and all agents from all students compete against each other in an online tournament. In this part, students are evaluated on a competition among AI agents from all student groups, but also on the quality of their code, the quality of their unit tests, the coverage of those tests, and how they managed their source code in git. Since the proposed board game is different every year, the difficulty of the task of creating the AI agent can vary slightly. However, we attempt to minimize this by selecting board games with a similar degree of complexity, or by qualitatively adjusting our level of expectation for the project.

Each of the two parts (the individual programming exercise and the group project) accounts for 50% of the final course score. In this way, we evaluate the individual students’ understanding of the lecture topics as well as the quality of their group projects, in terms of source code quality, code efficiency, and source code management.

2.7. Gamification components

Motivation is a key factor in student performance and engagement in a course (Saeed & Zyngier, 2012). Several studies report that the use of gamification is an efficient way of motivating students (Seaborn & Fels, 2015; Shahid et al., 2019; Jawad & Tout, 2021). We chose to base our course on competition-based learning, a certain way of implementing gamification in which students take part in a friendly competition. Competition-based learning has been shown to improve motivation and help increase student performance (Burguillo, 2010; Ho et al., 2022). Several studies describe its usage in computer science courses (Lawrence, 2004; Ebner & Holzinger, 2007; Ribeiro et al., 2009; Jung & Shim, 2010) and report satisfactory results in student engagement and motivation. It can also be used in conjunction with project-based learning to encourage peer-learning among students (Boss & Krauss, 2007; Burguillo, 2010; Ho et al., 2022).

As described in Section 2.6, we evaluate students on multiple criteria. We designed the evaluation score awarded to the project to be in several parts: 35% of the score is awarded based on the use of git, code coverage, and software documentation; another 35% of the score is awarded based on software engineering practices, naming conventions, comments, and bare minimum functionalities; the remaining 30% depends on the students’ performance during the final tournament. The performance of the students in the tournament (the aforementioned 30%) is computed based on: (i) their agent ranking better than the random agent; (ii) the number of bad moves that their agent tried to play; (iii) the overall rank of the agent. Since the project score accounts for 50% of the final course grade, the tournament performance only accounts for 15% of the final grade of the student.
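The weighting can be checked with a short calculation. The sketch below encodes the percentages stated above; the component names, the `final_grade` function, and the assumption that every component is scored between 0 and 1 are illustrative choices, not part of the actual grading procedure.

```python
# Shares of the final course grade (Section 2.6).
EXAM_SHARE = 0.50
PROJECT_SHARE = 0.50

# Breakdown of the project score, as stated in the text.
PROJECT_WEIGHTS = {
    "git_coverage_docs": 0.35,
    "engineering_practices": 0.35,
    "tournament": 0.30,
}


def final_grade(exam, project_parts):
    """Combine an exam score and project component scores, each in [0, 1]."""
    project = sum(
        weight * project_parts[name] for name, weight in PROJECT_WEIGHTS.items()
    )
    return EXAM_SHARE * exam + PROJECT_SHARE * project


# Share of the final grade that depends on the tournament: 0.50 * 0.30 = 0.15.
tournament_share = PROJECT_SHARE * PROJECT_WEIGHTS["tournament"]

# A student with full marks everywhere except a tournament score of zero.
grade_without_tournament = final_grade(
    exam=1.0,
    project_parts={
        "git_coverage_docs": 1.0,
        "engineering_practices": 1.0,
        "tournament": 0.0,
    },
)
```

Under these assumptions, a group that scores nothing in the tournament can still reach 85% of the final grade.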

There are two frameworks for introducing competition in education. In competitive-based learning, the learning result depends on the result of the competition itself. In competition-based learning, by contrast, the learning result is independent of the student’s score in the competition (Burguillo, 2010). In our case, the different parts introduced in the grading system make 85% of the final score independent of the competition. The learning result is thus also independent of the competition, since the skills taught in the course are diverse and a good final score can be achieved with a well-designed but relatively simple AI agent.

It has been shown that, in some cases, competitive goal structures can impede achievement, and that cooperation, or cooperation with intergroup competition, is more effective than interpersonal competition (Johnson et al., 1981). That is why we designed the project as group work, and also why we devoted a relatively small percentage of the grade to the final competition: to encourage so-called friendly competition, where students are motivated to engage in the competition seriously without jeopardizing peer-based learning.

This paper is available on arXiv under a CC BY 4.0 DEED license.