Authors:

(1) Sasun Hambardzumyan, Activeloop, Mountain View, CA, USA;

(2) Abhinav Tuli, Activeloop, Mountain View, CA, USA;

(3) Levon Ghukasyan, Activeloop, Mountain View, CA, USA;

(4) Fariz Rahman, Activeloop, Mountain View, CA, USA;

(5) Hrant Topchyan, Activeloop, Mountain View, CA, USA;

(6) David Isayan, Activeloop, Mountain View, CA, USA;

(7) Mark McQuade, Activeloop, Mountain View, CA, USA;

(8) Mikayel Harutyunyan, Activeloop, Mountain View, CA, USA;

(9) Tatevik Hakobyan, Activeloop, Mountain View, CA, USA;

(10) Ivo Stranic, Activeloop, Mountain View, CA, USA;

(11) Davit Buniatyan, Activeloop, Mountain View, CA, USA.

Table of Links

- Abstract and Intro

- Current Challenges

- Tensor Storage Format

- Deep Lake System Overview

- Machine Learning Use Cases

- Performance Benchmarks

- Discussion and Limitations

- Related Work

- Conclusions, Acknowledgement, and References

6. PERFORMANCE BENCHMARKS

In this section, we experimentally demonstrate Deep Lake’s performance at scale, from ingestion into the format through to training, in comparison with other dataloaders and formats. We compare streaming datasets from different storage backends and showcase performance gains and scalability while training on the cloud.

6.1 Ingestion speed to various formats

![Figure 6: Ingesting 10,000 images from the FFHQ [43] dataset into different formats (lower is better)](https://cdn.hackernoon.com/images/fWZa4tUiBGemnqQfBGgCPf9594N2-lr83ume.png)

10,000 images from the FFHQ [43] dataset were uncompressed and stored in NumPy format. Each 1024x1024x3 raw image is a 3MB array. Then, as shown in Fig. 6, the images were serially written into each format. To increase performance, we used TensorStore [23] to write to the Zarr [52] and N5 [24] formats. The experiments were run on an AWS c5.9xlarge machine. Deep Lake achieves significantly faster write performance than array formats and is on par with binary formats such as WebDataset [19] and FFCV Beton [39].
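The size figures and the serial-write procedure above can be sketched as follows. This is a minimal illustration, not the benchmark code used in the paper: it assumes one byte per channel (which is consistent with the stated 3MB per image), and `time_serial_writes` is a hypothetical helper that times any per-item write function supplied by the caller.

```python
import time

# One uncompressed 1024x1024x3 image, assuming 1 byte per channel (uint8).
HEIGHT, WIDTH, CHANNELS = 1024, 1024, 3
BYTES_PER_IMAGE = HEIGHT * WIDTH * CHANNELS      # 3 * 1024 * 1024 bytes = 3 MiB
NUM_IMAGES = 10_000
TOTAL_BYTES = BYTES_PER_IMAGE * NUM_IMAGES       # raw payload written per format


def time_serial_writes(write_one, items):
    """Call write_one(item) for each item, one after another, and
    return the elapsed wall-clock time in seconds."""
    start = time.perf_counter()
    for item in items:
        write_one(item)
    return time.perf_counter() - start
```

Passing a format-specific writer as `write_one` keeps the timing loop identical across formats, so differences in elapsed time reflect the writer rather than the harness.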

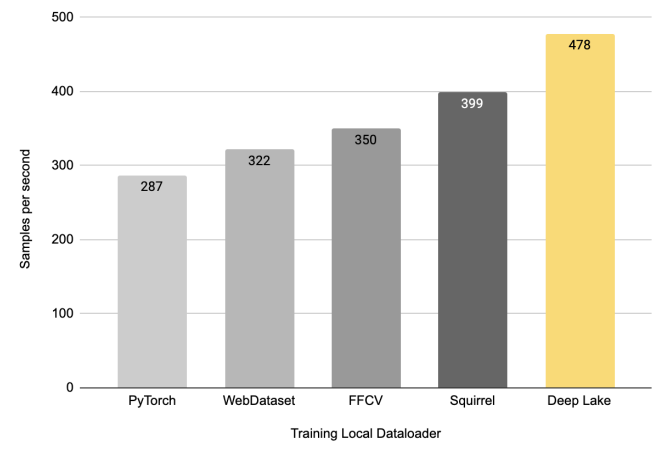

6.2 Comparison of local dataloaders

As shown in Fig. 7, Deep Lake achieves faster data loading in a PyTorch training loop without a model. The experiment was carried out on an AWS P3.2xlarge instance with one Nvidia V100 GPU card. The dataset consists of 50,000 randomly generated 250x250x3 images stored as JPEG files. The libraries benchmarked were Deep Lake, FFCV [39], Squirrel [75], WebDataset [19], and the native PyTorch dataloader [58].
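A loop "without a model" simply iterates over the loader and discards each batch, so the measured rate reflects data loading alone. The sketch below shows one way such a measurement could be written; it is an assumption about the general approach, not the paper's benchmark script, and `measure_throughput` is a hypothetical helper that accepts any iterable of batches.

```python
import time


def measure_throughput(loader, count_samples=len):
    """Iterate over `loader` once with no model work and return
    (total_samples, elapsed_seconds, samples_per_second).

    `count_samples` maps a batch to its number of samples; the default
    `len` works for batches that are plain sequences.
    """
    total = 0
    start = time.perf_counter()
    for batch in loader:
        total += count_samples(batch)
    elapsed = time.perf_counter() - start
    rate = total / elapsed if elapsed > 0 else float("inf")
    return total, elapsed, rate
```

For a loader that yields tensors or tuples, a caller would supply a `count_samples` function suited to that batch structure.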

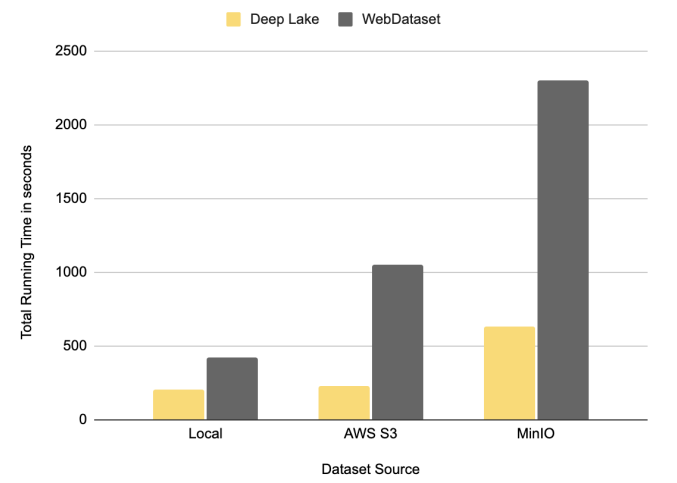

6.3 Streamable dataloader from different locations

In this experiment, as shown in Fig. 8, we explore different storage backends for remote streaming using the same dataset as in Section 6.2. MinIO [17] is running on another machine in a local network. Notably, when streaming from AWS S3, Deep Lake achieves performance similar to that obtained when the data is local to the machine. Both WebDataset and Deep Lake are significantly slower when streaming the data from MinIO than from AWS S3. For more detailed dataloader benchmarks, we recommend the exhaustive dataloader overview study by Ofeidis et al. [54].

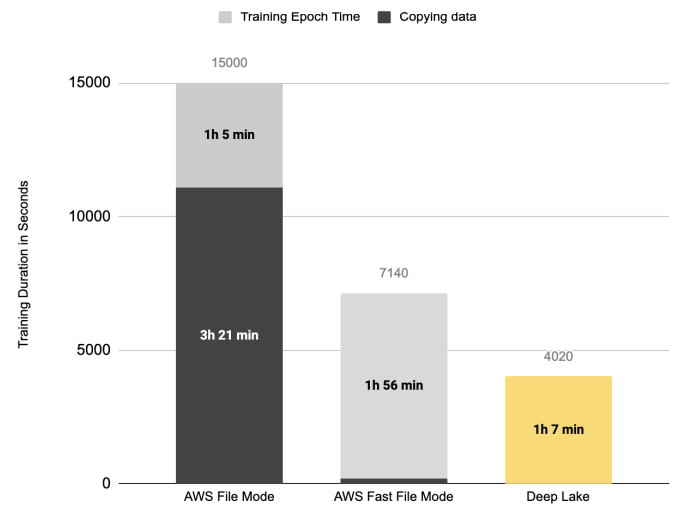

6.4 ImageNet training on the cloud

Since Deep Lake was built to be cloud-first, in this and the next section we demonstrate the benefits it provides for training models on the cloud. We take the ImageNet dataset [35] and store it on AWS S3 [1] both in its original form and in Tensor Storage Format. The dataset contains 1.2 million images and labels, totaling 150GB. Deep Lake achieves training performance virtually identical to that obtained when the data is local to the machine. This saves up to 4x GPU compute time and cost, as shown in Fig. 9.

6.5 Distributed training of a large multi-modal dataset

As a second experiment, we take the LAION dataset [67], containing 400M image-text pairs, and train CLIP [60], an image-text embedding model with 1 billion parameters. The original dataset is a table of Parquet files with a column of image URLs. Downloading the dataset from the source took 100 hours, while ingestion into Tensor Storage Format took only 6 hours; the dataset totals 1.9TB in size. The dataset was stored on AWS in the US-east region, while the training GPU machine was in the US-central region. As shown in Fig. 10, Deep Lake achieves high GPU utilization by streaming 5,100 images/s into 16 Nvidia A100 GPUs, while without a model it reaches up to 80,000 images/s per machine in the same region.
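To put the streaming rate in perspective, the back-of-the-envelope arithmetic below derives two figures from the numbers quoted above. These derived values are not reported in the paper; they assume that the 5,100 images/s is the aggregate rate across all 16 GPUs and that the rate stays constant over one full pass of the 400M pairs.

```python
# Figures quoted in the text.
TOTAL_IMAGES_PER_SECOND = 5_100   # assumed to be aggregate across all GPUs
NUM_GPUS = 16
DATASET_SIZE = 400_000_000        # image-text pairs

# Derived values (illustrative only, assuming a constant streaming rate).
per_gpu_rate = TOTAL_IMAGES_PER_SECOND / NUM_GPUS           # images/s per GPU
seconds_per_pass = DATASET_SIZE / TOTAL_IMAGES_PER_SECOND   # one pass over the data
hours_per_pass = seconds_per_pass / 3600

print(f"{per_gpu_rate:.2f} images/s per GPU")
print(f"{hours_per_pass:.1f} hours per pass over the dataset")
```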

This paper is available on arXiv under CC 4.0 license.