This paper is available on arxiv under CC 4.0 license.

Authors:

(1) Muhammed Yusuf Kocyigit, Boston University;

(2) Anietie Andy, University of Pennsylvania;

(3) Derry Wijaya, Boston University.

Table of Links

- Abstract and Intro

- Related Works

- Data

- Method

- Analysis and Results

- Conclusion

- Limitations

- Ethics Statement and References

- Appendix: Toxicity Measurement

- Appendix: Correlation Over Time

- Appendix: Wikidata

- Appendix: Hyperparameter Sensitivity

Related Works

Two primary aspects distinguish works in this area from one another: how the context is selected for each group or community, and how the embeddings trained on this chosen text are used. Below, we discuss prior works in terms of how they use their learned embeddings and how they differentiate the context for each group. We use the term "context" to refer to words that co-occur with a specific group or community in the corpus. These words are used to train the group embeddings and to analyze how the group is portrayed.
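As an illustration of what "context" means operationally, the sketch below counts the words that fall within a fixed token window around any mention of a target term. The window size, the whitespace tokenization, and the helper name `extract_context` are assumptions for illustration only; the works discussed here differ in how they define the window and which terms count as mentions.

```python
from collections import Counter


def extract_context(tokens: list[str], targets: set[str], window: int = 5) -> Counter:
    """Count words co-occurring within `window` tokens of any target term.

    Hypothetical helper: window size and tokenization are illustrative
    assumptions, not settings taken from any of the cited works.
    """
    context = Counter()
    for i, tok in enumerate(tokens):
        if tok in targets:
            lo = max(0, i - window)
            hi = min(len(tokens), i + window + 1)
            for j in range(lo, hi):
                # Skip target terms themselves (including the mention at i).
                if tokens[j] not in targets:
                    context[tokens[j]] += 1
    return context


sentence = "the committee praised the engineer for her careful work".split()
print(extract_context(sentence, {"engineer"}, window=2))
```

The resulting counts (or the windows themselves) are the kind of text on which group-specific embeddings are then trained.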

Word Embeddings Usage

Word embeddings have been used to detect larger-scale semantic and cultural shifts. Martinc, Kralj Novak, and Pollak (2020) focused on identifying short-term cultural changes in public discourse. Kozlowski, Taddy, and Evans (2019) analyzed cultural variation over a longer period, while Hamilton, Leskovec, and Jurafsky (2016) and Wijaya and Yeniterzi (2011) studied semantic shifts and how the meanings of words have changed over long periods of time.

Previous works have also used word embeddings to analyze social dynamics toward or within a specific community. Farrell et al. (2020) used word embeddings to identify novel jargon generated by the online manosphere community. Tripodi et al. (2019) tracked antisemitic sentiment over 12 decades in documents acquired from the Bibliothèque nationale de France, using words that refer to Jewish people to extract relevant text and generate a semantic space. Xu et al. (2019) analyzed movie scripts and books to identify gender representations in narratives. Lucy, Tadimeti, and Bamman (2022) used semantic axes, bipolar collections of adjectives that represent an antonymous relationship (An, Kwak, and Ahn 2018), together with gendered words to analyze how behavior toward women has changed across different stages of the online manosphere community. They show how clusters of terminology coincide with the creation of various subreddits and analyze these clusters along different semantic axes. Garg et al. (2018) used word embeddings in a larger-scale study to analyze ethnic and gender stereotypes and how these stereotypes changed over time. Additionally, they combined their findings with demographic and occupation data to offer a more coherent narrative.
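To make the idea of a semantic axis concrete, the sketch below assumes the SemAxis formulation of An, Kwak, and Ahn (2018): the axis vector is the mean of the positive-pole word vectors minus the mean of the negative-pole word vectors, and a word's position on the axis is its cosine similarity with that axis. The toy two-dimensional vectors are hypothetical stand-ins for trained embeddings, and the exact setup of Lucy, Tadimeti, and Bamman (2022) (for example, how the poles are expanded) may differ.

```python
import math


def mean_vector(vectors: list[list[float]]) -> list[float]:
    """Element-wise mean of equal-length vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


def cosine(u: list[float], v: list[float]) -> float:
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


def semaxis_score(word_vec, positive_pole, negative_pole) -> float:
    """Project a word onto the axis running from the negative to the positive pole.

    Axis = mean(positive pole vectors) - mean(negative pole vectors);
    positive scores lean toward the positive pole, negative toward the other.
    """
    axis = [p - q for p, q in zip(mean_vector(positive_pole), mean_vector(negative_pole))]
    return cosine(word_vec, axis)


# Toy 2-d vectors (hypothetical, not trained embeddings).
positive = [[1.0, 0.2], [0.8, 0.0]]    # e.g. "kind", "gentle"
negative = [[-1.0, 0.0], [-0.8, 0.2]]  # e.g. "cruel", "harsh"
print(semaxis_score([0.9, 0.1], positive, negative))
print(semaxis_score([-0.9, 0.1], positive, negative))
```

Because the score is a cosine, it lies in [-1, 1], which lets words (or group embeddings) be compared on the same axis regardless of vector length.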

Context Differentiation

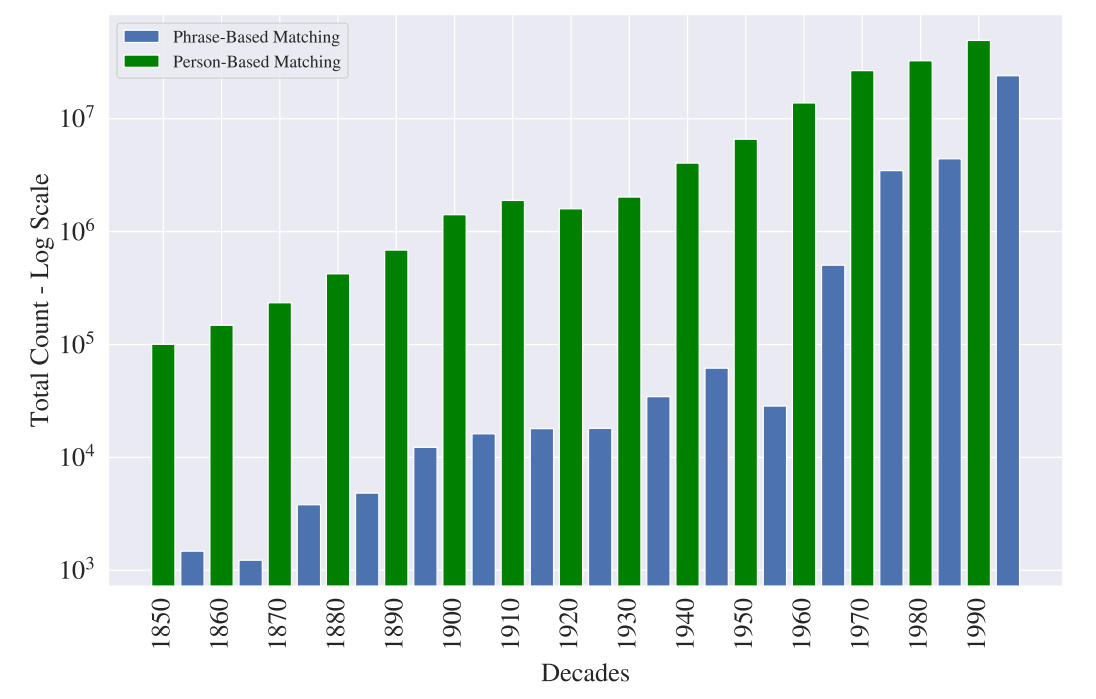

Researchers use several methods to filter the context of the target group and to differentiate it from that of the groups against which the target group is compared. Bolukbasi et al. (2016) used demographically gendered names to identify context specific to one gender. An, Kwak, and Ahn (2018) used a curated list of words referring to Jewish people to filter the relevant text. Lucy, Tadimeti, and Bamman (2022) took this core idea one step further, training a gender classifier on a predetermined list of phrases to find additional keywords for filtering relevant context. Kozlowski, Taddy, and Evans (2019) searched for keywords in dictionaries and thesauri and observed that larger keyword sets yield more reliable results. While many of these methods have yielded meaningful results, we suggest that filtering based on referring words or on demographically gendered or racial names is only an approximation. We propose using the complete names of actual people and extracting only the context that applies to them. We argue that, if applied at sufficient scale, this method yields more precise measurements of implicit bias than previous methods.
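The difference between filtering on a single name token and filtering on a person's complete name can be sketched with whole-word phrase matching. The corpus sentences and the names in them are hypothetical, and exact case-sensitive matching is a simplifying assumption; a real pipeline would also need to handle name variants and coreference.

```python
import re


def mentions(text: str, phrase: str) -> bool:
    """True if `phrase` occurs in `text` as a whole-word sequence (case-sensitive)."""
    return re.search(r"\b" + re.escape(phrase) + r"\b", text) is not None


def filter_sentences(sentences: list[str], phrases: list[str]) -> list[str]:
    """Keep sentences that mention at least one of the given phrases."""
    return [s for s in sentences if any(mentions(s, p) for p in phrases)]


# Hypothetical corpus; the people named here are invented for illustration.
corpus = [
    "Jordan Lee praised the new policy.",
    "Flights to Jordan resumed in spring.",
    "Jordan Smith declined to comment.",
]

# Filtering on a first-name token matches all three sentences,
# including one about a country rather than a person.
print(filter_sentences(corpus, ["Jordan"]))

# Filtering on a complete name keeps only context about that individual.
print(filter_sentences(corpus, ["Jordan Lee"]))
```

The full-name filter trades recall for precision: it misses sentences that refer to the person by a partial name, but it does not pull in context about unrelated entities that share a token.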

Additionally, when people use referring phrases, the expression is explicitly general, and biases are generally filtered out when making general statements about a group. Changes in laws, such as the Civil Rights Act, and in social codes have served to suppress overt expressions of group-level bias. When talking about individuals, however, the group context is not explicit. People can hold implicit biases toward a group that they are unaware of, or that they filter out when making general statements about the group, yet still project onto most individuals from that group. Hence, context collected around references to individuals better captures implicit biases.